Editor's Note: This article was compiled by the WeChat public account “thejiangmen”. To reprint it, reply “Reprint” to the WeChat public account to obtain permission. Translation...

Translator: Qu Xiaofeng, Ph.D. student, Human Biometrics Research Center, Hong Kong Polytechnic University. Personal research homepage: http://

Some say that artificial intelligence (AI) is the future, some say it is science fiction, and some say it is already part of our daily lives. All of these assessments are correct, depending on which kind of artificial intelligence you mean.

Earlier this year, Google DeepMind's AlphaGo defeated South Korean Go master Lee Sedol. In describing DeepMind's victory, the media used the terms artificial intelligence (AI), machine learning, and deep learning. All three played a part in AlphaGo's win over Lee Sedol, but they are not the same thing.

Here we use the simplest visualization, a set of concentric circles, to show how the three relate to one another and how each is applied.

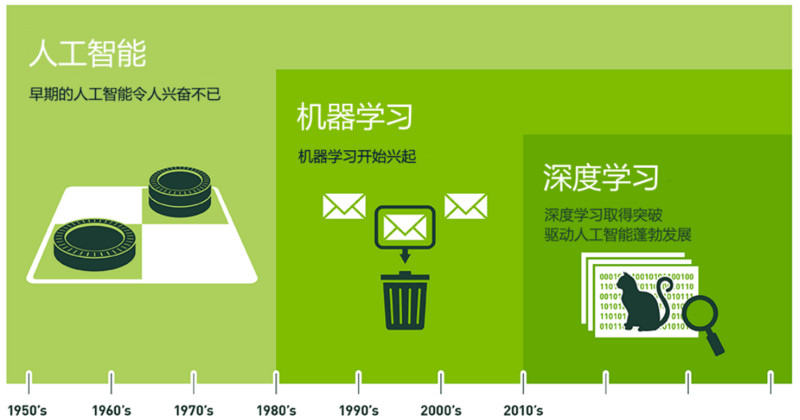

As shown above, artificial intelligence is the earliest idea and the largest circle. Machine learning came later and sits inside it. Deep learning, the core driver of today's AI boom, is the innermost circle.

Artificial intelligence emerged in the 1950s amid great optimism. Smaller subsets of AI developed later: first machine learning, then deep learning, which is itself a subset of machine learning. Deep learning has had an unprecedented impact.

From conception to boom

In 1956, a group of computer scientists gathered at the Dartmouth Conferences and proposed the concept of "artificial intelligence." Since then, AI has lingered in people's imaginations and slowly incubated in research laboratories. In the decades that followed, opinion swung between two extremes. AI was hailed as the key to a dazzling future for human civilization, or it was dismissed as the fantasy of overreaching technologists and thrown on the scrap heap. Frankly, until 2012, both views coexisted.

In the past few years, especially since 2015, AI has taken off. Much of this is due to the widespread availability of GPUs, which make parallel processing ever faster, cheaper, and more powerful. It also reflects the combination of practically unlimited storage with a sudden flood of data of every kind (big data): images, text, transactions, mapping data, and more.

Let's take a quick look at how computer scientists have moved AI from its earliest beginnings to the applications that hundreds of millions of people use every day.

Artificial intelligence: intelligence given to machines

King me: Computer programs that play checkers were among the earliest applications of artificial intelligence, and they sparked a wave of enthusiasm in the 1950s. (Translator's note: In checkers, a piece that reaches the opponent's back row is crowned king, and a king can also move backward.)

As early as the summer of 1956, the pioneers of AI dreamed of using the newly emerging computers to build complex machines with the same essential characteristics as human intelligence. This is what we now call "general artificial intelligence" (General AI): an all-powerful machine that has all of our senses (perhaps even more), all of our reason, and the ability to think the way we do.

We see such machines in movies all the time: friendly ones like C-3PO in Star Wars, and evil ones like the Terminator. Strong AI of this kind still exists only in movies and science fiction, and the reason is easy to understand: we cannot build it, at least not yet.

What we can build today is generally called "narrow AI" (weak AI): technology that performs a specific task as well as a person, or even better. Examples include image classification on Pinterest and face recognition on Facebook.

These are examples of weak AI in practice. They implement specific parts of human intelligence. But how? Where does that intelligence come from? This brings us to the next circle in: machine learning.

Machine learning: a way to achieve artificial intelligence

Spam-free diet: Machine learning helps keep your inbox (mostly) free of spam. (Translator's note: The English word "spam" comes from SPAM, an American brand of canned luncheon meat. Until the 1960s, British agriculture had not recovered from its losses in World War II, so Britain imported large quantities of this cheap canned meat from the United States. It was reputedly not very tasty, yet it flooded the market.)

At its most basic, machine learning uses algorithms to parse data, learn from it, and then make a decision or prediction about something in the real world. Instead of hand-coding a software program with a fixed set of instructions for a specific task, we "train" the machine on large amounts of data, and its algorithms learn from that data how to perform the task.
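
Here is a minimal sketch in Python that contrasts the two approaches, assuming a toy spam filter. `hard_coded_is_spam` is a hypothetical hand-written rule. `train` and `predict` implement a simple multinomial naive Bayes classifier, one of many possible learning algorithms and chosen here only for brevity, which learns word statistics from a handful of made-up labeled messages.

```python
import math
from collections import Counter

def hard_coded_is_spam(message: str) -> bool:
    """Hypothetical hand-written rule: a programmer decides what spam looks like."""
    return "free" in message.lower()

def train(examples):
    """Learn per-class word counts from (text, label) pairs; label is "spam" or "ham"."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in examples:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab

def predict(model, text):
    """Return the label with the highest naive Bayes log-probability."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        score = math.log(doc_counts[label] / total_docs)  # log prior
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one (Laplace) smoothing so unseen words do not zero out the score.
            count = word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Made-up training data for illustration only.
training = [
    ("win a free prize now", "spam"),
    ("cheap meds limited offer", "spam"),
    ("claim your prize money", "spam"),
    ("meeting moved to friday", "ham"),
    ("lunch tomorrow with the team", "ham"),
    ("free to talk about the project friday", "ham"),
]
model = train(training)

message = "free to talk friday"
print(hard_coded_is_spam(message))  # True: the fixed rule misfires
print(predict(model, message))      # ham: the learned model weighs every word
```

The hand-written rule flags any message that contains "free". The trained model instead weighs each word by how often it appeared in each class. A real filter would be trained on thousands of messages, not six.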

Machine learning grew directly out of the early AI community. Its traditional algorithms include decision tree learning, inductive logic programming, clustering, reinforcement learning, and Bayesian networks. As we know, none of these achieved strong AI, and early machine learning methods could not even achieve weak AI.

The most successful application area for machine learning was computer vision, although it still required a great deal of hand-coding to get the job done. People had to write classifiers by hand, such as edge detection filters, so that the program could identify where an object started and where it ended. They wrote shape detectors to determine whether the object had eight sides, and a classifier to recognize the letters "S-T-O-P." Using these hand-written classifiers, they could finally develop an algorithm that perceived an image and decided whether it showed a stop sign.
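
People designed the edge detection filters mentioned above by hand. Machines did not learn them. The code below is a minimal sketch that assumes a grayscale image stored as rows of 0-255 brightness values. It applies the standard hand-designed Sobel kernel for vertical edges. The function names and the threshold of 100 are hypothetical choices made for illustration.

```python
# Hand-designed 3x3 Sobel kernel: responds to left-to-right brightness changes
# (vertical edges). Every number here was chosen by a person, not learned.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

def apply_filter(image, kernel):
    """Slide the kernel over every full window and record the weighted sum."""
    k = len(kernel)
    out = []
    for r in range(len(image) - k + 1):
        row = []
        for c in range(len(image[0]) - k + 1):
            total = sum(kernel[i][j] * image[r + i][c + j]
                        for i in range(k) for j in range(k))
            row.append(total)
        out.append(row)
    return out

def edge_columns(image, threshold=100):
    """Hand-written rule: report image columns whose filter response reaches the threshold."""
    responses = apply_filter(image, SOBEL_X)
    # Output column c corresponds to the window centered on image column c + 1.
    return sorted({c + 1 for row in responses
                   for c, value in enumerate(row) if abs(value) >= threshold})

# A 4x6 test image: dark on the left (0), bright on the right (255).
image = [[0, 0, 0, 255, 255, 255] for _ in range(4)]
print(edge_columns(image))  # [2, 3]: the boundary between columns 2 and 3
```

A person fixes both the kernel and the threshold. If the input has less contrast, as with a fogged-over sign, the filter response can fall below the threshold, and the rule misses the edge.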

The results were decent, but not the kind of success that inspires confidence. On a foggy day, when the sign is hard to see, or when a tree partly hides it, the algorithm often fails. This is why, until recently, computer vision could not come close to human performance: it was too rigid and too easily disrupted by environmental conditions.

Over time, better learning algorithms changed all of that.

Deep learning: a technique for implementing machine learning

Herding cats: Picking out pictures of cats from YouTube videos was one of the first breakthrough demonstrations of deep learning. (Translator's note: "Herding cats" is an English idiom. Cats love their freedom and resist being tamed, so the phrase describes a chaotic situation or a task that is nearly impossible to complete.)

Artificial neural networks are an important algorithm from early machine learning, and they have gone through decades of ups and downs. Neural networks are inspired by the biology of our brains, in which neurons are densely interconnected. In a biological brain, however, any neuron can connect to any other neuron within a certain physical distance. An artificial neural network instead has discrete layers, connections, and directions of data propagation.

For example, we can cut an image into tiles and feed them to the first layer of the neural network. Each neuron in the first layer passes its output to the second layer. The neurons in the second layer do the same, passing their output to the third layer, and so on, until the final layer produces the result.

Each neuron assigns a weight to each of its inputs, reflecting how correct or incorrect that input is relative to the task being performed. The final output is then determined by the total of these weighted inputs.
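
The layer-by-layer flow and the weighting described above fit in a few lines of Python. This minimal illustration does not represent any particular real network. It assumes fully connected layers, adds a bias term to each neuron, and passes each weighted sum through a sigmoid "squashing" function, which is one common choice among several. The weights are made-up numbers. A real network would learn them.

```python
import math

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, bias):
    """One artificial neuron: weight each input, add them up with a bias, then squash."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(total)

def layer(inputs, weight_rows, biases):
    """A layer is a group of neurons that all read the previous layer's outputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def forward(inputs, network):
    """Pass data through each layer in order; the last layer's output is the result."""
    activations = inputs
    for weight_rows, biases in network:
        activations = layer(activations, weight_rows, biases)
    return activations

# Hypothetical 3-input network: a layer of 2 neurons, then 1 output neuron.
network = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # layer 1
    ([[1.2, -0.7]], [0.05]),                               # layer 2 (output)
]
print(forward([1.0, 0.0, 1.0], network))
```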

Take the stop sign again. The features of a stop sign image are broken apart and "examined" by the neurons: its octagonal shape, its fire-engine red color, its distinctive letters, its typical traffic-sign size, the fact that it is not moving, and so on. The network's task is to conclude whether the image is a stop sign. Based on all of these weights, it produces a considered guess: a "probability vector."

In this example, the system might report that the image is 86% likely to be a stop sign, 7% likely to be a speed limit sign, 5% likely to be a kite stuck in a tree, and so on. The network is then told whether its conclusion was correct.
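
Networks commonly use the softmax function to turn their final raw scores into a probability vector like this one, though it is not the only option. In this sketch the four scores are hypothetical values. They were picked so that the result roughly reproduces the article's illustrative percentages.

```python
import math

def softmax(scores):
    """Turn raw output scores into probabilities that are positive and sum to 1."""
    m = max(scores)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

labels = ["stop sign", "speed limit sign", "kite in a tree", "other"]
scores = [4.0, 1.5, 1.2, 0.3]  # hypothetical final-layer outputs
probs = softmax(scores)
for label, p in sorted(zip(labels, probs), key=lambda t: -t[1]):
    print(f"{label}: {p:.0%}")
# prints: stop sign: 86%, speed limit sign: 7%, kite in a tree: 5%, other: 2%
```

Softmax preserves the ranking of the raw scores, so the largest score always gets the largest probability. It also forces the probabilities to add up to 100%.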

Even this example is getting ahead of itself. Until recently, the AI research community largely ignored neural networks. Neural networks existed in the earliest days of AI, but they contributed little to "intelligence." The main problem was that even the most basic neural networks require a great deal of computation, and computers of the time could not meet those demands.

Still, a few devoted research teams, led by Geoffrey Hinton at the University of Toronto, persisted. They parallelized the algorithms to run on supercomputers and proved the concept. It was not until GPUs became widely used, however, that these efforts paid off.

Back to the stop sign example. A neural network is tuned through training, and along the way it often gets the answer wrong. What it needs most is more training. It must see hundreds of thousands, even millions, of images, until the weights on the neurons' inputs are tuned so precisely that it gets the right answer practically every time, whether in fog, sun, or rain.
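
A single neuron trained by gradient descent (logistic regression) illustrates how training "tunes" the weights on a neuron's inputs. This minimal sketch relies on heavy simplifying assumptions. Each hypothetical example is three hand-made yes/no features (octagonal shape, red color, visible letters) rather than raw pixels, and the learning rate and epoch count are arbitrary. Real networks tune millions of weights across many layers, usually with backpropagation.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict(weights, bias, features):
    """Probability that the input is a stop sign."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

def train_neuron(examples, epochs=1000, lr=0.5):
    """Tune one neuron's weights by stochastic gradient descent on the log loss.

    examples: list of (features, label) pairs, label 1 = stop sign, 0 = not.
    """
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # For log loss with a sigmoid, the gradient w.r.t. the weighted sum is (pred - label).
            error = predict(weights, bias, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias

# Hypothetical features: [octagonal shape, red color, letters visible]
examples = [
    ([1, 1, 1], 1),  # clear stop sign
    ([1, 1, 0], 1),  # stop sign with letters hidden by fog
    ([0, 1, 1], 0),  # red sign that is not an octagon
    ([0, 0, 1], 0),  # speed limit sign
    ([0, 0, 0], 0),  # kite in a tree
]
weights, bias = train_neuron(examples)
for features, label in examples:
    print(features, label, round(predict(weights, bias, features), 3))
```

Every pass over the examples nudges each weight in the direction that reduces the error. After enough passes, the weight on "octagonal shape" dominates, and the neuron separates stop signs from the other examples.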

Only then can we say that the neural network has successfully learned what a stop sign looks like. Likewise, in Facebook's case it has learned your mother's face, and in 2012, at Google, Professor Andrew Ng's neural network learned what a cat looks like.

Ng's breakthrough was to make these neural networks dramatically larger, with far more layers and neurons, and then feed the system massive amounts of data to train it. In Ng's case, the data were images from 10 million YouTube videos. Ng put the "deep" in deep learning: the "depth" refers to the many layers in the neural network.
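
The "depth" of a network is its number of layers, and adding layers and neurons quickly multiplies the number of weights that training must tune. This minimal sketch assumes fully connected layers, in which each neuron has one weight per neuron in the previous layer plus one bias. The layer sizes are hypothetical and far smaller than those of the network in the Google experiment.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes[0] is the number of inputs; each later entry is a layer of neurons.
    Every neuron has one weight per neuron in the previous layer, plus one bias.
    """
    total = 0
    for fan_in, width in zip(layer_sizes, layer_sizes[1:]):
        total += fan_in * width + width
    return total

def depth(layer_sizes):
    """Number of layers of neurons (the input itself is not counted as a layer)."""
    return len(layer_sizes) - 1

shallow = [3, 2, 1]
deep = [3, 64, 64, 64, 1]
print(depth(shallow), count_parameters(shallow))  # 2 11
print(depth(deep), count_parameters(deep))        # 4 8641
```

Doubling the depth here multiplies the parameter count by several hundred. This illustrates why deep networks needed both massive training data and the computing power of GPUs.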

Today, image recognition trained with deep learning can even outperform humans in some scenarios, from recognizing cats, to identifying early indicators of cancer in blood, to spotting tumors in MRI scans. Google's AlphaGo learned the game of Go and then trained for its match by tuning its neural network through playing against itself, over and over again, without stopping.

Deep learning, the future of artificial intelligence

Deep learning has enabled many practical applications of machine learning and, by extension, has expanded the overall field of AI. It breaks down tasks in ways that make all kinds of machine assistance seem possible. Driverless cars, better preventive health care, and even better movie recommendations are all here today or on the horizon.

AI is the present and the future. With the help of deep learning, AI may even reach the science fiction future we have long imagined. I'll take your C-3PO; you can keep your Terminator.
